Hey, and welcome to the second update of the Brave New Teams newsletter.

Like trains of cars on tracks of plush

I hear the level bee:

A jar across the flowers goes,

Their velvet masonry

Emily Dickinson, The Bee

Emily Elizabeth Dickinson (1830 – 1886) was an American poet. Little known during her life, she has since been regarded as one of the most important figures in American poetry. Dickinson lived much of her life in isolation. Considered an eccentric by locals, she developed a penchant for white clothing and was known for her reluctance to greet guests or, later in life, even to leave her bedroom. Dickinson never married, and most friendships between her and others depended entirely upon correspondence.

Against that backdrop, and somewhat in contrast, we will talk about very social creatures. Bees thrive in a complex system of countless interactions and an elaborate division of labor (more on this later).

A quick update from SuperScript

The work on SuperScript continues at an accelerated pace. The foundation has been laid, the product-market fit is confirmed and the core optimization algorithm has been developed. Now, the implementation phase starts. The plan for the next few months is as follows:

- Launch a simple game to (playfully) illustrate the problem of assembling teams

- Develop (and launch) a web-based tool to allow users to write NLP-powered project descriptions (more on this later)

- Develop (and launch) a web-based tool to illustrate the inner workings of the agent-based model for teamwork (more on this later)

- Apply reinforcement learning methods to complement (or replace) the optimization algorithm behind team assembly

- Participate in pitching competitions

- Continue discussions with medium-to-large-sized organizations

- Define the MVP in terms of features, user flow, and technology stack

The purpose of SuperScript remains unchanged. It is to build optimal teams to solve complex problems. The optimization algorithm has been developed and produces the expected results (due to the multidimensionality of the problem, the algorithm is computationally expensive and relatively slow for large numbers of workers). However, the optimization step assumes that problems are well defined and that the required skill mix and the worker skill portfolios are completely known. These assumptions are not realistic. Therefore, we have to go back and build the missing puzzle pieces, namely steps 1 and 2.

The diagram below illustrates the high-level model setup of SuperScript. Step 3, the optimization algorithm, has been developed (and will later be enhanced with reinforcement learning to make it run faster and converge more quickly to the global optimum). Step 2 is about finding the optimal skill mix for a given portfolio. To do this, pattern recognition techniques are employed, i.e., the required skills of past projects are used to predict the required skill mix for the current project. Step 1 is about classifying the problems and understanding their (deep) structure.

The focus now is on step 1, the problem definition. We use NLP techniques to classify and enrich the text a user is entering to describe the problem.

What is NLP? Natural Language Processing (NLP) is a field of machine learning concerned with the ability of a computer to understand, analyze, manipulate, and potentially generate human language. (You can find more details and a handful of well-known applications here.)

In our context, NLP techniques are applied to free-text fields to impose a structure and classify the problem by predicting relevant skill tags. The objective is to build a web-based tool that allows users to write better and more complete project (problem) descriptions. The tool will also produce a set of relevant skills that are likely required to solve the problem at hand successfully. To get an idea of how NLP-powered text classification works, you can play with so-called zero-shot classification (that is, the task of predicting a topic that the model has not been trained on) here. It feels a bit like magic!
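
To make the idea of predicting skill tags from free text a little more concrete, here is a minimal sketch. It is not the SuperScript tool and it is not zero-shot classification: it is a much simpler stand-in that compares word counts in a description with short, hand-written skill profiles. The skill tags, the profile texts, and the function names are all made up for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical skill tags with short hand-written profiles (illustration only).
SKILL_PROFILES = {
    "data science": "statistics machine learning model data analysis prediction",
    "web development": "website frontend backend browser javascript web app",
    "project management": "plan schedule budget stakeholders deadline coordinate",
}


def tokenize(text):
    """Lower-case the text and count its alphabetic words."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def suggest_skills(description, top_n=2):
    """Return up to top_n skill tags whose profile overlaps the description."""
    doc = tokenize(description)
    scores = {tag: cosine(doc, tokenize(profile))
              for tag, profile in SKILL_PROFILES.items()}
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [tag for tag, score in ranked[:top_n] if score > 0]


print(suggest_skills(
    "We need a machine learning model to analyse customer data "
    "and build a prediction"
))
```

A real NLP model replaces the word-overlap score with learned language representations, which is why it can also handle descriptions that share no words with the skill tags.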

Emergent properties of teamwork

Let’s go back to bees. Intuitively, a honeybee colony is the archetype of a complex system. Bees interact in non-trivial ways. This paper uses an agent-based approach to evaluate the impact of local actions at the bee level on the global system. A similar system and its emergent properties are illustrated in this NetLogo model (to start the model, define the parameters, press ‘setup’, and then ‘go’).

If the bee analogy doesn’t resonate with you, one complex system everybody is aware of these days is a virus. You may want to analyze the emergence of the virus in this model by changing population density and transmissibility parameters.

Very much in this spirit, a few years ago, BCG published a thought-provoking report arguing that we need to transition from ‘mechanical’ management to one based on ecological and biological principles.

So, what are agent-based models? According to Wikipedia: “An agent-based model (ABM) is a class of computational models for simulating the actions and interactions of autonomous agents (both individual or collective entities such as organizations or groups) with a view to assessing their effects on the system as a whole. It combines elements of game theory, complex systems, emergence, computational sociology, multi-agent systems, and evolutionary programming.”

We have built an ABM for teamwork. Workers are assembled in teams to work on projects based on their (hard and soft) skills and availability. Emergence is analyzed at the individual worker level (workload, skills, training), the project level (interaction, project outcomes) as well as at the organizational level (workload, skills, training).

The model is used to test several hypotheses, understand emergent properties, and generate simulated training data for the Machine Learning (reinforcement learning) prototype.

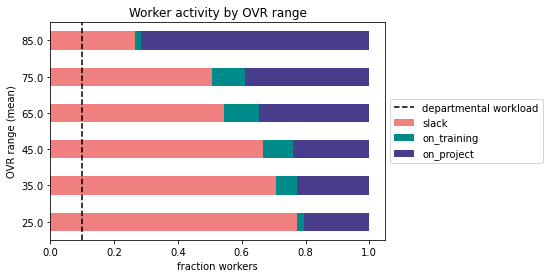

One interesting (and not entirely unexpected) emergent property of the ABM is ‘the-winner-takes-most-effect’. The higher a worker’s skill level (OVR), the more often this worker is selected for (supposedly more interesting) project work. Participating in successful projects increases the skill level, and hence those workers’ skill levels improve over time. Workers with very low skill levels get replaced more frequently, seldom get a chance to prove their worth (and to learn) in a project team, and are idle most of the time. For average-skill workers, training takes up a substantial portion of their workday, which, combined with project work, stabilizes the skill level over time.
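
The feedback loop behind this effect can be sketched in a few lines. The toy simulation below is not the SuperScript ABM (it has no training, replacement, or availability), and every parameter in it is an assumption chosen for illustration: workers are drawn into teams with a probability that grows with their skill, and members of successful projects gain a little skill.

```python
import random


def simulate(n_workers=20, team_size=4, steps=500, gain=0.01, seed=0):
    """Toy skill-feedback loop; returns (initial skills, final skills, selections)."""
    rng = random.Random(seed)
    skills = [rng.uniform(0.2, 0.8) for _ in range(n_workers)]
    initial = list(skills)
    selections = [0] * n_workers

    for step in range(steps):
        # Draw a team without replacement, favouring higher-skilled workers.
        candidates = list(range(n_workers))
        team = []
        for _ in range(team_size):
            weights = [skills[i] ** 4 for i in candidates]  # assumed weighting
            pick = rng.choices(candidates, weights=weights, k=1)[0]
            candidates.remove(pick)
            team.append(pick)

        # Assumption: success probability equals the mean skill of the team.
        p_success = sum(skills[i] for i in team) / team_size
        success = rng.random() < p_success

        for i in team:
            selections[i] += 1
            if success:
                skills[i] = min(1.0, skills[i] + gain)  # learning by doing

    return initial, skills, selections


initial, final, selections = simulate()
# List workers from lowest to highest initial skill with their selection counts.
for start, count in sorted(zip(initial, selections)):
    print(f"initial skill {start:.2f} -> selected {count} times")
```

Because selection depends on skill and skill grows with selection, small initial differences can compound over time. The exponent on the weights controls how strongly the loop favours the best workers.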

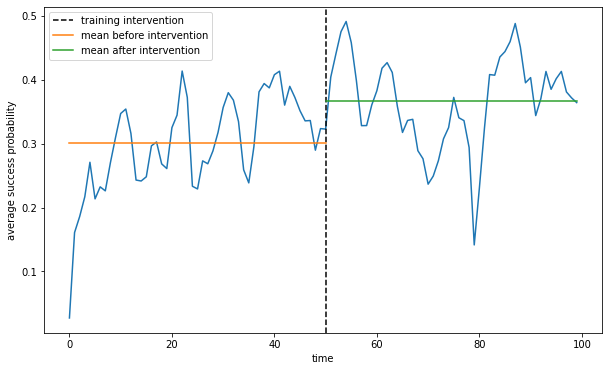

One way to mitigate ‘the-winner-takes-most-effect’ is targeted training. With knowledge about predicted in-demand skills, low-to-medium-skilled workers suitable for an “upgrade” can be targeted for training. Such a well-designed training intervention, here applied to the bottom half of workers for two in-demand skills, can significantly impact the overall (average) project success probability (it increases from 30% before to 35% after the intervention).

For the data science aficionados among you, the model specification is available here and you may access the full code on the GitHub repository here.

Diversification matters most

According to Wikipedia, “modern portfolio theory (MPT), or mean-variance analysis, is a mathematical framework for assembling a portfolio of assets such that the expected return is maximized for a given level of risk. It is a formalization and extension of diversification in investing, the idea that owning different kinds of financial assets is less risky than owning only one type. Its key insight is that an asset’s risk and return should not be assessed by itself but by how it contributes to a portfolio’s overall risk and return. It uses the variance of asset prices as a proxy for risk.” The intuition is that diversification in a portfolio of financial assets pays off because it reduces the risk for the same level of expected return or increases the expected return for the same level of risk. Not owning a well-diversified portfolio is a stupid idea and a risk that is not rewarded.
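
A small worked example shows the effect. The sketch below uses the standard two-asset formula for portfolio variance; the numbers (8% expected return, 20% volatility, correlation of 0.3) are invented for illustration.

```python
import math


def portfolio_stats(w1, mu1, mu2, sigma1, sigma2, rho):
    """Expected return and volatility of a two-asset portfolio.

    w1 is the weight of asset 1 (asset 2 gets 1 - w1), mu are expected
    returns, sigma are volatilities, rho is the correlation between the assets.
    """
    w2 = 1.0 - w1
    expected_return = w1 * mu1 + w2 * mu2
    variance = ((w1 * sigma1) ** 2 + (w2 * sigma2) ** 2
                + 2 * w1 * w2 * rho * sigma1 * sigma2)
    return expected_return, math.sqrt(variance)


# Hypothetical assets: same expected return and risk, imperfectly correlated.
single = portfolio_stats(1.0, 0.08, 0.08, 0.20, 0.20, 0.3)
mixed = portfolio_stats(0.5, 0.08, 0.08, 0.20, 0.20, 0.3)
print(f"one asset:  return {single[0]:.1%}, volatility {single[1]:.1%}")
print(f"two assets: return {mixed[0]:.1%}, volatility {mixed[1]:.1%}")
```

With these assumed numbers, splitting the money equally keeps the expected return at 8% while volatility falls from 20% to roughly 16%. The lower the correlation between the two assets, the larger the reduction.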

The very same principle also applies to teams. Very much in the Aristotelian spirit of “the whole is greater than the sum of its parts”, diversification (or “diversity” in HR-speak) matters. A team consisting of only workers with similar educational backgrounds, age, sex, and personality is not well diversified. It is (for most intents and purposes) just not a good team to have. The optimal team composition exhibits different skills but also a level of cognitive diversity. And similarly to portfolio optimization, workers described by their skill characteristics can be assembled into an ideal team using optimization techniques.
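
As a rough illustration of what "assembling a team using optimization techniques" can mean, the sketch below tries every possible team of a given size and keeps the one that best covers the required skills. This brute-force search is not the SuperScript algorithm, and the workers, skills, and scoring rule are all hypothetical.

```python
from itertools import combinations

# Hypothetical workers with skill levels between 0 and 1 (illustration only).
WORKERS = {
    "Ana": {"python": 0.9, "design": 0.2},
    "Ben": {"python": 0.8, "statistics": 0.3},
    "Cleo": {"design": 0.9},
    "Dev": {"statistics": 0.8, "python": 0.4},
}


def coverage(team, required_skills):
    """Score a team by the best available level for each required skill."""
    return sum(
        max(WORKERS[name].get(skill, 0.0) for name in team)
        for skill in required_skills
    )


def best_team(required_skills, team_size):
    """Exhaustively search all teams of team_size for the highest coverage."""
    candidates = combinations(sorted(WORKERS), team_size)
    team = max(candidates, key=lambda t: coverage(t, required_skills))
    return team, coverage(team, required_skills)


team, score = best_team(["python", "design", "statistics"], team_size=2)
print(team, round(score, 2))
```

In this made-up example the two strongest Python developers, Ana and Ben, form the weakest pair, because their skills overlap. The best pair combines complementary profiles. Exhaustive search only works for tiny examples, since the number of possible teams grows very quickly with the number of workers.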

Endnote

Thank you for subscribing to Brave New Teams. There is a lot of talk about the future of work, the role of automation, and robots. The dystopian future is one where robots don’t replace humans but become our bosses. As discussed in the post Automatic for the People, there is plenty of reason to remain optimistic about an augmented (and still human) future of knowledge work.

In the next newsletter, we will talk more about the results of the agent-based model and NLP-powered text classification. And much more!

(Note that there will be a separate announcement for the launch of the team assembly game.)

Stay safe, and don’t lose the script.